Automatic Kubernetes cluster upgrade on Creodias OpenStack Magnum

OpenStack Magnum clusters created in Creodias can be automatically upgraded to the next minor Kubernetes version. This feature is available for clusters starting with version 1.29 of Kubernetes.

In this article we demonstrate an upgrade of a Magnum Kubernetes cluster from version 1.29 to version 1.30.

How the automatic upgrade works in Magnum

Magnum manages Kubernetes clusters using cluster templates and automates the upgrade process through its rolling upgrade mechanism. Starting with the 1.29/1.30 version pair, Magnum:

Upgrades the control plane nodes first.

Upgrades worker nodes one by one to minimize downtime.

Adds an extra node before each node is upgraded (on top of the node count that the user specified in the cluster spec).

Maintains API compatibility during the upgrade, so that existing workloads continue running smoothly.

It is not possible to skip minor versions when upgrading.
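
The one-minor-version rule can be checked before an upgrade is triggered. The following is a minimal sketch in POSIX shell; the helper names major_of, minor_of and is_next_minor are hypothetical and are not part of Magnum or the OpenStack client.

```shell
# Hypothetical helpers (not part of Magnum): split "1.29.13" or "v1.29.13".
major_of() { printf '%s\n' "$1" | sed 's/^v//' | cut -d. -f1; }
minor_of() { printf '%s\n' "$1" | sed 's/^v//' | cut -d. -f2; }

# Succeed only if the target is exactly one minor version above the current one.
is_next_minor() {
  [ "$(major_of "$1")" = "$(major_of "$2")" ] &&
    [ "$(( $(minor_of "$1") + 1 ))" -eq "$(minor_of "$2")" ]
}

if is_next_minor 1.29.13 1.30.10; then
  echo "1.29.13 -> 1.30.10: allowed"
fi
if ! is_next_minor 1.29.13 1.31.0; then
  echo "1.29.13 -> 1.31.0: skips a minor version"
fi
```

The check only compares version strings locally; Magnum itself remains the authority on which upgrades it accepts.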

Prerequisites

No. 1 Hosting

You need a Creodias hosting account with Horizon interface https://horizon.cloudferro.com/auth/login/?next=/.

No. 2 Access to the cloud and cluster

The openstack and kubectl commands must be installed and configured for your cloud and cluster:

How To Install OpenStack and Magnum Clients for Command Line Interface to Creodias Horizon

How To Access Kubernetes Cluster Post Deployment Using Kubectl On Creodias OpenStack Magnum

No. 3 Availability of upgradeable cluster templates (versions 1.29/1.30)

There is a direct correspondence between cluster templates available on Creodias and the Kubernetes versions with the identical minor version numbers:

| Upgradeable Kubernetes versions | calico cluster template | cilium cluster template |
|---|---|---|
| 1.29 | k8s-v1.29.13-1.1.0 | k8s-v1.29.13-1.1.0-cilium |
| 1.30 | k8s-v1.30.10-1.1.0 | k8s-v1.30.10-1.1.0-cilium |

Note

At the time of writing, upgradeable cluster templates are available only in the Creodias WAW4-1 region.

Currently, only the 1.29 version of Kubernetes is automatically upgradeable to 1.30. Version 1.30 will become automatically upgradeable to 1.31 when the corresponding versions of cluster templates become available on Creodias.

To test the waters, you can create a new cluster with the cilium 1.29 template. The command may look like this:

openstack coe cluster create \
  --cluster-template k8s-v1.29.13-1.1.0-cilium \
  --docker-volume-size 50 \
  --labels eodata_access_enabled=false,floating-ip-enabled=true \
  --merge-labels \
  --keypair sshkey \
  --master-count 3 \
  --node-count 5 \
  --timeout 190 \
  --master-flavor eo2a.large \
  --flavor eo2a.medium \
  cilium-129

No. 4 Ensuring app compatibility with the target K8s version

In this particular case, check Kubernetes 1.30 Release Notes to ensure your applications are compatible. Be sure to always check release notes for the target Kubernetes version you are upgrading the cluster to.
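
Besides reading the release notes, one way to spot workloads that still call deprecated APIs is the API server metric apiserver_requested_deprecated_apis. The sketch below assumes that your kubeconfig user is allowed to read the /metrics endpoint, which may not be the case on every cluster; the function name deprecated_api_usage is hypothetical.

```shell
# Print API server metric lines that record requests to deprecated APIs.
# Assumption: the current kubeconfig user may read the /metrics endpoint.
# Each line carries group, version, resource and removed_release labels.
deprecated_api_usage() {
  kubectl get --raw /metrics | grep '^apiserver_requested_deprecated_apis'
}
```

Calling deprecated_api_usage prints nothing and returns a non-zero status when no deprecated API has been requested since the API server started. Compare the removed_release label of any line it prints with the target Kubernetes version.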

Back up and observe the state of the cluster before the upgrade

We need to compare the state of the cluster before, during and after the upgrade.

Ideally, everything should work right out of the box. However, it is preferable to check and verify.

Kubernetes cluster backup

It is highly recommended to make a backup of your 1.29 cluster before the upgrade. See Backup of Kubernetes Cluster using Velero.

This backup will serve as the state “before” the upgrade.

Monitor the upgrade process with cluster dashboard

The simplest way to observe a cluster is through cluster dashboard. See Using Dashboard To Access Kubernetes Cluster Post Deployment On Creodias OpenStack Magnum.

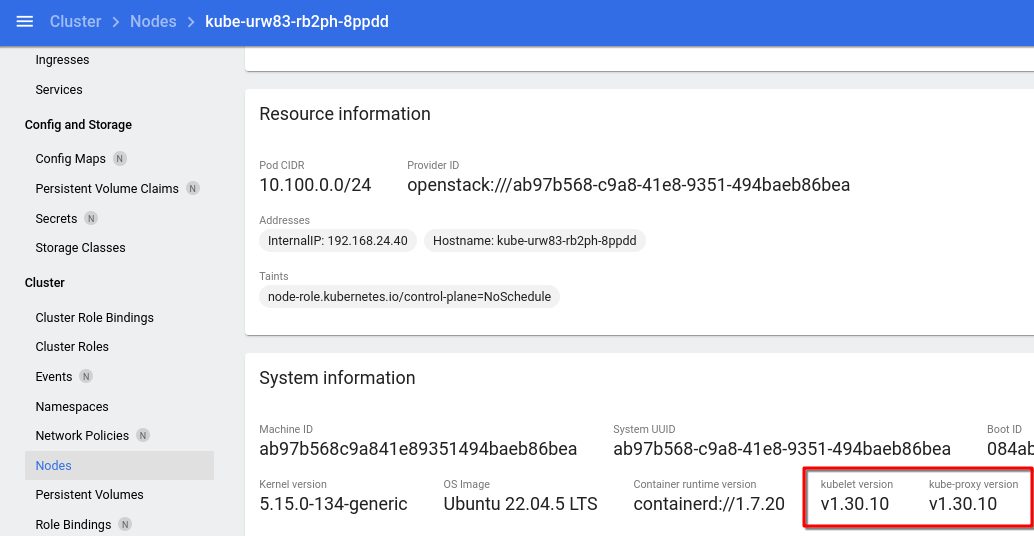

To see node versions, select the Nodes option in the left side menu, click on a node name and scroll down a bit to find the kubelet version.

Other tools for comparing cluster states include CI/CD tests, monitoring the cluster with Prometheus and Grafana, storing cluster statistics in a database as a time series, and so on.
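
A lightweight complement to the tools above is a plain-text snapshot taken with kubectl. The sketch below records node kubelet versions and deployment readiness in one file; the function name snapshot_cluster and the output file name are hypothetical, and the columns are only a suggestion.

```shell
# Write node kubelet versions and deployment readiness to the file given as $1.
snapshot_cluster() {
  {
    echo "# nodes"
    kubectl get nodes --no-headers \
      -o custom-columns=NAME:.metadata.name,KUBELET:.status.nodeInfo.kubeletVersion
    echo "# deployments"
    kubectl get deployments --all-namespaces --no-headers \
      -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,READY:.status.readyReplicas
  } > "$1"
}
```

For example, snapshot_cluster before.txt saves the state before the upgrade; running it again with a different file name afterwards gives a second file to compare against.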

Prepare the upgrade

Verify cluster version

The following command prints cluster version:

openstack coe cluster show cilium-129 | awk '/ coe_version /{print $4}'

In this article, the version before the upgrade is 1.29.13.
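
The same field can also be read without awk by using the generic output options of the OpenStack client (-f value -c <column>). This is a small sketch; the wrapper name cluster_version is hypothetical.

```shell
# Print only the coe_version field of the cluster named (or identified) by $1.
# -f value selects the plain value formatter, -c coe_version selects the column.
cluster_version() {
  openstack coe cluster show "$1" -f value -c coe_version
}
```

With the cluster from this article, the call would be cluster_version cilium-129.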

Identify cluster ID

Retrieve the ID of the Kubernetes cluster you want to upgrade:

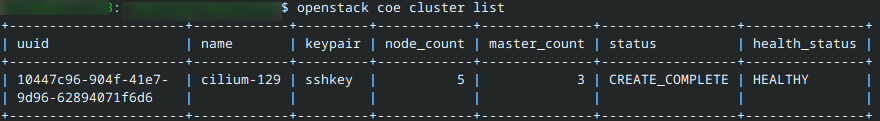

openstack coe cluster list

Example output:

The cluster name is cilium-129 and the cluster ID (uuid) is 10447c96-904f-41e7-9d96-62894071f6d6.

In commands that follow, we can use either the name or the uuid of the cluster.

Trigger the upgrade

The command to initiate automatic upgrade is:

openstack coe cluster upgrade <cluster-id> <template-version>

In our case:

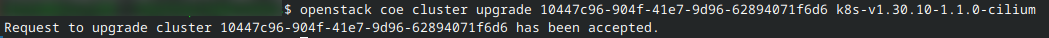

openstack coe cluster upgrade 10447c96-904f-41e7-9d96-62894071f6d6 k8s-v1.30.10-1.1.0-cilium

From the table in Prerequisite No. 3 we see that, since cluster cilium-129 was created with template k8s-v1.29.13-1.1.0-cilium, we have to use template k8s-v1.30.10-1.1.0-cilium for the upgrade.
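
The template pairing from that table can be written down as a small lookup, which helps avoid mixing calico and cilium templates in scripts. This is only a sketch: the function name upgrade_template_for is hypothetical, and the mapping covers just the four templates listed in this article.

```shell
# Map a 1.29 cluster template to its 1.30 counterpart with the same network driver.
# Only the template names listed in this article are known to this sketch.
upgrade_template_for() {
  case "$1" in
    k8s-v1.29.13-1.1.0)        echo "k8s-v1.30.10-1.1.0" ;;
    k8s-v1.29.13-1.1.0-cilium) echo "k8s-v1.30.10-1.1.0-cilium" ;;
    *) echo "no known upgrade template for: $1" >&2; return 1 ;;
  esac
}

upgrade_template_for k8s-v1.29.13-1.1.0-cilium
```

The last line prints k8s-v1.30.10-1.1.0-cilium, the template used in the upgrade command above. Any other input, including a 1.30 template, is rejected because no 1.31 templates are listed yet.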

On the next line, a message should confirm that the upgrade request for that cluster has been accepted:

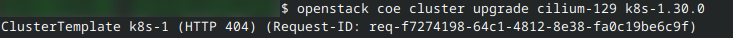

Conversely, if you make a mistake in, say, the template name, you will get a 404 message.

Commands to monitor the upgrade progress

A GUI way of monitoring the upgrade process would be using the dashboard for the cluster, as mentioned above. A CLI way would be to issue the specific commands, for example:

Show only the version of the cluster

openstack coe cluster show cilium-129 | awk '/ coe_version /{print $4}'

During the upgrade, the health_status field might temporarily show UNHEALTHY until the upgrade finishes. Regardless of that, the cluster remains responsive throughout the entire upgrade process.
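
Instead of re-running the command by hand, the cluster status can be polled in a loop. The sketch below assumes that Magnum reports the upgrade through the status values UPDATE_IN_PROGRESS, UPDATE_COMPLETE and UPDATE_FAILED; the function name wait_for_upgrade and the POLL_INTERVAL variable are hypothetical.

```shell
# Poll the status field of cluster $1 until the update completes or fails.
# Assumption: an upgrade ends in UPDATE_COMPLETE or UPDATE_FAILED.
# POLL_INTERVAL (seconds, default 30) is a hypothetical knob for this sketch.
wait_for_upgrade() (
  cluster=$1
  interval=${POLL_INTERVAL:-30}
  while :; do
    status=$(openstack coe cluster show "$cluster" -f value -c status)
    echo "$(date '+%H:%M:%S') status: $status"
    case "$status" in
      UPDATE_COMPLETE) exit 0 ;;
      UPDATE_FAILED)   exit 1 ;;
    esac
    sleep "$interval"
  done
)
```

The function body runs in a subshell, so its variables do not leak into the calling shell. A call such as wait_for_upgrade cilium-129 returns 0 on success and 1 on failure, which makes it usable in scripts. The loop has no timeout, so interrupt it manually if the status never reaches a final value.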

Monitor the nodes

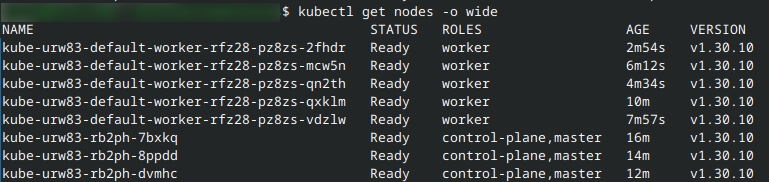

kubectl get nodes -o wide

When all of the nodes are at version 1.30.10, the upgrade is finished.

As a rule of thumb, expect each node to take a few minutes to upgrade. The actual time depends on many factors, which are outside the scope of this article.
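
The "all nodes at the target version" condition can also be tested in a script. The following sketch reads the kubelet version of every node through a kubectl jsonpath query and compares each one with the expected string; the function names all_nodes_at and upgrade_finished are hypothetical. Note that kubelet reports its version with a leading "v", for example v1.30.10.

```shell
# Read one version per line on stdin; succeed only if there is at least one
# line and every line equals the expected version given as $1.
all_nodes_at() {
  awk -v want="$1" '($0 "") != (want "") { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Succeed when every node in the cluster reports the given kubelet version.
upgrade_finished() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' |
    all_nodes_at "$1"
}
```

For the upgrade in this article, upgrade_finished v1.30.10 returns 0 once the last node has been replaced, and a non-zero status while any node still runs an older kubelet.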

Verify the upgrade

If you both made a backup of the old cluster and upgraded to the next minor Kubernetes version, you will have two states of the cluster and be able to compare them.

Use the dashboard to compare the results “before” and “after”. Hunt down any differences, learn what they mean and, if needed, resolve them.
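
If you also keep plain-text snapshots of the cluster state, for instance the output of kubectl get commands saved before and after the upgrade, a diff makes the changes explicit. This is a minimal sketch; the function name report_changes and the file names are hypothetical.

```shell
# Compare two snapshot files. Prints "no differences" and returns 0 when they
# are identical; otherwise prints a unified diff and returns 1.
report_changes() {
  if diff -u "$1" "$2"; then
    echo "no differences"
  else
    return 1
  fi
}
```

A call such as report_changes before.txt after.txt is expected to show changed kubelet versions on the nodes; any other difference, such as a deployment with fewer ready replicas, deserves a closer look.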

If something is still not right, check the Kubernetes 1.30 release notes for breaking changes relative to version 1.29 that may affect your workloads.