How to access EODATA using s3cmd on Creodias

When you want to access EODATA on the Creodias cloud, one possibility is to mount it as a file system, so that the cloud data appears to be part of the local file system. Another possibility, explored in this article, is to access the EODATA repository using s3cmd on a virtual machine hosted on the Creodias cloud.

What We Are Going To Cover

Configuring s3cmd for access to the EODATA repository

Exploring the EODATA repository

Downloading files

Prerequisites

No. 1 Account

You need a Creodias hosting account with access to the Horizon interface: https://horizon.cloudferro.com/auth/login/?next=/.

No. 2 Obtained EC2 credentials

You need to obtain credentials used for accessing the EODATA repository. They are not the same as the ones used to access user-created object storage containers. The following article contains more information: How to get credentials used for accessing EODATA on a cloud VM on Creodias

No. 3 A virtual machine

You need a virtual machine hosted on the Creodias cloud. This article contains instructions for Ubuntu 22.04. Other operating systems and environments are outside the scope of this article.

To learn how to create a virtual machine hosted on Creodias cloud, you can follow one of these articles:

No. 4 s3cmd installed

You need to have s3cmd installed on your virtual machine. The following article contains information on how to do that:

No. 5 Understanding how s3cmd handles its configuration file

s3cmd stores its configuration in configuration files, one connection per file. You need to decide where you want to keep connection data for the EODATA repository. Learn more here:

Configuring s3cmd for access to the EODATA repository

Throughout this article, we are going to use the file eodata-access in the directory /home/eouser as an example configuration file; feel free to adjust the commands according to your needs.

Here is the command to start the process of configuring s3cmd:

s3cmd -c /home/eouser/eodata-access --configure

You will now get questions regarding your configuration. Answer as explained below, but instead of CLOUDFERRO enter your access key and instead of PUBLIC enter your secret key (if they are different). If you do not know these credentials, follow Prerequisite No. 2. After answering each question, press Enter.

Access Key [access]: CLOUDFERRO

Secret Key [access]: PUBLIC

Default Region [RegionOne]: default

Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.

S3 Endpoint [eodata.cloudferro.com:] eodata.cloudferro.com

Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used

if the target S3 system supports dns based buckets.

DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]:

Encryption password is used to protect your files from reading

by unauthorized persons while in transfer to S3

Encryption password:

Path to GPG program [/usr/bin/gpg]:

When using secure HTTPS protocol all communication with Amazon S3

servers is protected from 3rd party eavesdropping. This method is

slower than plain HTTP, and can only be proxied with Python 2.7 or newer

Use HTTPS protocol [No]: Yes

On some networks all internet access must go through a HTTP proxy.

Try setting it here if you can't connect to S3 directly

HTTP Proxy server name:

Access Key [access]: CLOUDFERRO

Secret Key [access]: PUBLIC

Default Region [RegionOne]: default

Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.

S3 Endpoint [data.cloudferro.com:] data.cloudferro.com

Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used

if the target S3 system supports dns based buckets.

DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]:

Encryption password is used to protect your files from reading

by unauthorized persons while in transfer to S3

Encryption password:

Path to GPG program [/usr/bin/gpg]:

When using secure HTTPS protocol all communication with Amazon S3

servers is protected from 3rd party eavesdropping. This method is

slower than plain HTTP, and can only be proxied with Python 2.7 or newer

Use HTTPS protocol [No]: False

On some networks all internet access must go through a HTTP proxy.

Try setting it here if you can't connect to S3 directly

HTTP Proxy server name:

New settings:

Access Key: CLOUDFERRO

Secret Key: PUBLIC

Default Region: default

S3 Endpoint: eodata.cloudferro.com

DNS-style bucket+hostname:port template for accessing a bucket: %(bucket)s.s3.amazonaws.com

Encryption password:

Path to GPG program: /usr/bin/gpg

Use HTTPS protocol: True

HTTP Proxy server name: _____

HTTP Proxy server port: 0

Once you have provided answers to all the questions, you should be asked whether you want to test access:

Test access with supplied credentials? [Y/n]

Reply with Y and press Enter.

Testing should take no more than a couple of seconds. Once the process is completed, you should get an appropriate confirmation and a question whether to save the settings or not:

Please wait, attempting to list all buckets...

Success. Your access key and secret key worked fine :-)

Now verifying that encryption works...

Not configured. Never mind.

Save settings? [y/N]

Answer with y and press Enter. You should get a confirmation similar to this:

Configuration saved to '/home/eouser/eodata-access'

You should now be returned to the command prompt.
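To double-check what was saved, you can read individual settings back from the configuration file. The following is a minimal sketch, assuming the file uses the usual s3cmd `key = value` layout with the keys `host_base` and `use_https`; the helper name `cfg_get` is hypothetical and is not part of s3cmd.

```shell
# Path of the configuration file used throughout this article.
EODATA_CFG=/home/eouser/eodata-access

# cfg_get FILE KEY
# Prints the value stored under KEY in an s3cmd-style "key = value" file.
# (Hypothetical helper, not an s3cmd command.)
cfg_get() {
    sed -n "s/^$2[[:space:]]*=[[:space:]]*//p" "$1" | head -n 1
}

# Only inspect the file if it exists; the secret key is deliberately not printed.
if [ -f "$EODATA_CFG" ]; then
    echo "Endpoint:  $(cfg_get "$EODATA_CFG" host_base)"
    echo "Use HTTPS: $(cfg_get "$EODATA_CFG" use_https)"
fi
```

If the printed endpoint does not match what you entered during configuration, re-run the configuration command from the beginning of this section.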

Exploring the EODATA repository

To list available containers, execute this command:

s3cmd -c /home/eouser/eodata-access ls

The output should show containers named DIAS and EODATA. Both provide access to the EODATA repository, so you can choose whichever you want. In this example, we are going to use the container called EODATA.

2017-11-15 10:40 s3://DIAS

2017-11-15 10:40 s3://EODATA

Other containers might also appear here, but dealing with them is outside the scope of this article.

List contents of the s3://EODATA directory by executing this command:

s3cmd -c /home/eouser/eodata-access ls s3://EODATA/

You should get output similar to this:

DIR s3://EODATA/C3S/

DIR s3://EODATA/CAMS/

DIR s3://EODATA/CEMS/

DIR s3://EODATA/CLMS/

DIR s3://EODATA/CMEMS/

DIR s3://EODATA/Envisat/

DIR s3://EODATA/Envisat-ASAR/

DIR s3://EODATA/Global-Mosaics/

DIR s3://EODATA/Jason-3/

DIR s3://EODATA/Landsat-5/

DIR s3://EODATA/Landsat-7/

DIR s3://EODATA/Landsat-8/

DIR s3://EODATA/SMOS/

DIR s3://EODATA/Sentinel-1/

DIR s3://EODATA/Sentinel-1-COG/

DIR s3://EODATA/Sentinel-1-RTC/

DIR s3://EODATA/Sentinel-2/

DIR s3://EODATA/Sentinel-3/

DIR s3://EODATA/Sentinel-5P/

DIR s3://EODATA/Sentinel-6/

DIR s3://EODATA/auxdata/

You can explore different directories in this way. For instance, to list the contents of the Sentinel-1 directory, execute this command:

s3cmd -c /home/eouser/eodata-access ls s3://EODATA/Sentinel-1/

The output should look like this:

DIR s3://EODATA/Sentinel-1/AUX/

DIR s3://EODATA/Sentinel-1/SAR/

Ending paths with slash while using s3cmd ls

When listing directories with the s3cmd ls command, make sure to end the path with a slash. If you execute this command to list the contents of the CLMS directory:

s3cmd -c /home/eouser/eodata-access ls s3://EODATA/CLMS/

you should get output which contains what you are looking for:

DIR s3://EODATA/CLMS/Global/

DIR s3://EODATA/CLMS/Imagery_and_reference_data/

DIR s3://EODATA/CLMS/Local/

DIR s3://EODATA/CLMS/Pan-European/

2021-10-08 07:42 0 s3://EODATA/CLMS/

If, however, you omit the slash at the end:

s3cmd -c /home/eouser/eodata-access ls s3://EODATA/CLMS

your output will contain only the path you requested, with a slash added at the end:

DIR s3://EODATA/CLMS/
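To avoid forgetting the trailing slash, the listing command can be wrapped in a small shell function. This is a sketch only: the function names `with_trailing_slash` and `eodata_ls` are hypothetical, and the configuration path is the example one used in this article.

```shell
# Path of the configuration file used throughout this article.
EODATA_CFG=/home/eouser/eodata-access

# with_trailing_slash PATH
# Prints PATH unchanged if it already ends with a slash, otherwise appends one.
with_trailing_slash() {
    case "$1" in
        */) printf '%s\n' "$1" ;;
        *)  printf '%s/\n' "$1" ;;
    esac
}

# eodata_ls PATH
# Lists the contents of PATH, adding the trailing slash when it is missing.
eodata_ls() {
    s3cmd -c "$EODATA_CFG" ls "$(with_trailing_slash "$1")"
}

# Example call (requires a working s3cmd configuration):
#   eodata_ls s3://EODATA/CLMS
```

With these functions defined in your shell session, `eodata_ls s3://EODATA/CLMS` and `eodata_ls s3://EODATA/CLMS/` run the same s3cmd command.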

Listing contents of a product

To list all contents of the product S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635, execute this command:

s3cmd -c /home/eouser/eodata-access ls s3://EODATA/Sentinel-5P/TROPOMI/L1B/2023/02/20/S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635/

The output should look like this:

2023-02-20 07:06 0 s3://EODATA/Sentinel-5P/TROPOMI/L1B/2023/02/20/S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635/

2023-02-20 07:06 132968 s3://EODATA/Sentinel-5P/TROPOMI/L1B/2023/02/20/S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635/S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635.cdl

2023-02-20 07:06 2156950172 s3://EODATA/Sentinel-5P/TROPOMI/L1B/2023/02/20/S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635/S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635.nc
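Before downloading a product, it can be useful to know how much disk space it needs. The sketch below sums the size column of a listing like the one above; the function name `total_bytes` is hypothetical, and it assumes the column layout shown in this article (date, time, size in bytes, path). It only counts the files that appear in the listing it receives.

```shell
# total_bytes
# Reads "s3cmd ls" output on standard input and prints the sum of the
# size column (third field) in bytes. Lines starting with DIR are skipped.
total_bytes() {
    awk '$1 != "DIR" && $3 ~ /^[0-9]+$/ { sum += $3 }
         END { printf "%.0f\n", sum }'
}

# Example call (requires a working s3cmd configuration):
#   s3cmd -c /home/eouser/eodata-access ls s3://EODATA/.../PRODUCT_NAME/ | total_bytes
```

s3cmd also provides a du command, which may be a more direct way to obtain the same information.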

Downloading files

Example 1: Downloading a single file

To download a file, use the s3cmd get command and provide the full path to the file. For example, to download the file called S5P_OPER_AUX_CTMANA_20230812T000000_20230813T000000_20230820T143624.cdl from the product called S5P_OPER_AUX_CTMANA_20230812T000000_20230813T000000_20230820T143624, execute this command:

s3cmd -c /home/eouser/eodata-access get s3://EODATA/Sentinel-5P/AUX/AUX_CTMANA/2023/08/12/S5P_OPER_AUX_CTMANA_20230812T000000_20230813T000000_20230820T143624/S5P_OPER_AUX_CTMANA_20230812T000000_20230813T000000_20230820T143624.cdl

The download process should begin. The file should be saved to your current working directory.

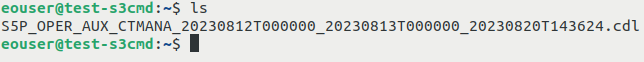

After the process was finished, you can use the ls command to see the file which was downloaded:
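In the Sentinel-5P examples in this article, the files inside a product directory carry the same name as the product, followed by an extension such as cdl or nc. Assuming that pattern holds for the product you need, the full path of a file can be built from the product path. The function name `product_file_uri` is hypothetical.

```shell
# product_file_uri PRODUCT_URI EXTENSION
# Prints PRODUCT_URI/<product name>.EXTENSION, where <product name> is the
# last component of PRODUCT_URI. A trailing slash on PRODUCT_URI is ignored.
product_file_uri() {
    product=${1%/}
    printf '%s/%s.%s\n' "$product" "${product##*/}" "$2"
}

# Example call (requires a working s3cmd configuration):
#   s3cmd -c /home/eouser/eodata-access get "$(product_file_uri s3://EODATA/.../PRODUCT_NAME cdl)"
```

This only builds the path; whether the file exists still has to be confirmed with s3cmd ls.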

Example 2: Downloading a full product

To download all files from a product, use the s3cmd get command with the -r parameter, which performs a recursive download. For instance, to download all files from the product S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635, execute this command:

s3cmd -c /home/eouser/eodata-access get -r s3://EODATA/Sentinel-5P/TROPOMI/L1B/2023/02/20/S5P_OFFL_L1B_RA_BD8_20230220T011032_20230220T025202_27746_03_020100_20230220T043635

The download process should begin. The files should be saved to your current working directory.

If you end the directory in the command above with a slash, the contents of that directory will be put to your current working directory. The directory itself will not be downloaded. Therefore, if you want to download the directory with its content, do not end the path with a slash.

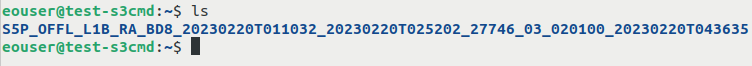

After the process was finished, you can use ls command to see the files which were downloaded:

Using the sync command

s3cmd can also make sure that a directory on your virtual machine contains the same content as a directory in the EODATA repository. If the local directory does not contain some or all of the files from the remote location, they will be downloaded.

Downloading a product

Let’s assume that you want to download the product called S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224 from the EODATA repository to the local hard drive and make sure that, even after your local modifications, it remains the same as the original.

First, create a directory for that product. In this example, it will be named product-path:

mkdir product-path

To download the product there using the sync command, execute:

s3cmd -c /home/eouser/eodata-access sync s3://EODATA/Sentinel-5P/TROPOMI/L1B_RA_BD1/2018/04/30/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224 ./product-path

Explanation of this command:

s3cmd is the name of the program we are using

-c /home/eouser/eodata-access is a way to pass the configuration file

sync is the name of the command we are executing

s3://EODATA/Sentinel-5P/TROPOMI/L1B_RA_BD1/2018/04/30/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224 is the remote location from which we are pulling files, in this case it is one of the products

./product-path is the local path to which we are downloading the files

The output should contain information about download progress, like so:

download: 's3://EODATA/Sentinel-5P/TROPOMI/L1B_RA_BD1/2018/04/30/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224.cdl' -> './product-path/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224.cdl' [1 of 2]

138436 of 138436 100% in 0s 3.09 MB/s done

download: 's3://EODATA/Sentinel-5P/TROPOMI/L1B_RA_BD1/2018/04/30/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224.nc' -> './product-path/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224/S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224.nc' [2 of 2]

468728375 of 468728375 100% in 3s 145.92 MB/s done

Done. Downloaded 468866811 bytes in 3.1 seconds, 143.71 MB/s.
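In the Sentinel-5P paths used in this article, the year, month and day directories match the first timestamp in the product name. Assuming that pattern holds, the remote location can be derived from the product name. The sketch below is illustrative only: the function name `s5p_product_uri` is hypothetical, and the product-type directory is passed in explicitly because it differs between the examples in this article (L1B in one, L1B_RA_BD1 in another).

```shell
# s5p_product_uri TYPE_DIR PRODUCT_NAME
# Prints s3://EODATA/Sentinel-5P/TROPOMI/TYPE_DIR/YYYY/MM/DD/PRODUCT_NAME,
# taking YYYY/MM/DD from the first _YYYYMMDDThhmmss_ timestamp in the name.
# Returns a non-zero status if no timestamp is found.
s5p_product_uri() {
    date_path=$(printf '%s\n' "$2" | awk '
        match($0, /_[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]T[0-9][0-9][0-9][0-9][0-9][0-9]_/) {
            d = substr($0, RSTART + 1, 8)   # skip the leading underscore
            printf "%s/%s/%s\n", substr(d, 1, 4), substr(d, 5, 2), substr(d, 7, 2)
        }')
    [ -n "$date_path" ] || return 1
    printf 's3://EODATA/Sentinel-5P/TROPOMI/%s/%s/%s\n' "$1" "$date_path" "$2"
}
```

Always confirm the resulting path with s3cmd ls before relying on it, since other collections may be organized differently.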

Once the operation is completed, you can navigate to the directory called product-path using

cd product-path

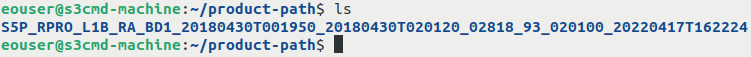

List its contents using the ls command to see the downloaded directory containing the product:

Navigate to the directory:

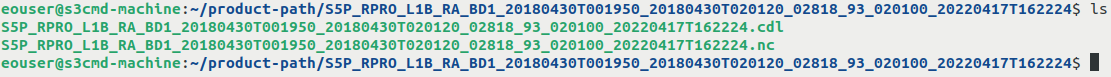

cd S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224/

List its contents using ls:

Syncing missing files

To further test the sync command, you can remove one of the downloaded files, for example S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224.cdl:

rm -i ./S5P_RPRO_L1B_RA_BD1_20180430T001950_20180430T020120_02818_93_020100_20220417T162224.cdl

The -i parameter is optional; it only makes rm ask for confirmation before removing the file.

To approve, answer the prompt for deletion with y and press Enter.

Return to the directory from which you originally executed the sync command:

cd ../..

Execute the sync command the same way you did previously. The missing file should be downloaded.

If you have modified any of the downloaded files, running the sync command again without additional parameters should restore those files that have counterparts in the EODATA repository. Conversely, files that you added locally and that do not originate from the cloud will be left intact.
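To see which files a sync run would download before actually transferring anything, s3cmd offers the --dry-run option. The wrapper below is a minimal sketch; the function name `sync_preview` is hypothetical and the configuration path is the example one used in this article.

```shell
# Path of the configuration file used throughout this article.
EODATA_CFG=/home/eouser/eodata-access

# sync_preview REMOTE_URI LOCAL_DIR
# Shows what "s3cmd sync" would download from REMOTE_URI into LOCAL_DIR
# without transferring any files.
sync_preview() {
    s3cmd -c "$EODATA_CFG" sync --dry-run "$1" "$2"
}

# Example call (requires a working s3cmd configuration):
#   sync_preview s3://EODATA/.../PRODUCT_NAME ./product-path
```

If the preview lists only the files you expect, repeat the command without --dry-run to perform the actual download.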

What To Do Next

s3cmd can also be used to access object storage from Creodias.

Learn more here: How to access object storage from Creodias using s3cmd