Managed Kubernetes Backups on Creodias


The importance of Kubernetes backups

Kubernetes backups are relevant in many scenarios:

Disaster recovery — restore a broken cluster to a known-good state.

Migration — move workloads between clusters.

Application rollback — undo failed deployments or upgrades.

Testing and development — clone production workloads into a test cluster.

Compliance — maintain historical snapshots for audit purposes.

A proper Kubernetes cluster backup should capture the complete state of the cluster—including default Kubernetes resources, custom resources, and the interactions between them—so that the cluster can be quickly and reliably re-deployed if needed. In addition to cluster state, it’s also important to consider persistent volumes and their backups, since application data often resides there.

What is Velero

To meet these requirements, the Kubernetes community widely uses Velero, an open-source tool released under the Apache License 2.0. Velero:

Backs up cluster objects and persistent volumes, and restores them when needed

Stores backups in S3-compatible object storage for portability and cost efficiency

Uses Kubernetes custom resources (such as Backup, Restore, Schedule, and BackupStorageLocation) to declare backup state, which the Velero server then reconciles

In Creodias Managed Kubernetes, the Managed Kubernetes Backups feature installs the Velero operator on the cluster automatically and provides a friendly graphical interface to manage backups.

This means you can enable and schedule backups without writing YAML or managing Velero directly. Under the hood, however, the familiar Velero mechanisms are at work — and when you need to restore a backup, you will use the Velero CLI to drive the process.
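To look at those mechanisms without changing anything, you can list the Velero custom resources on the cluster. This is a read-only sketch; it assumes Velero runs in the default velero namespace and that your kubeconfig points to the cluster. The function name is hypothetical.

```shell
# Read-only sketch: list the Velero objects managed by Creodias Managed Kubernetes.
# Assumes Velero runs in the default "velero" namespace (override with $1).
list_velero_objects() {
  local ns="${1:-velero}"
  # Schedules drive the periodic backups configured in the GUI
  kubectl get schedules.velero.io --namespace "$ns"
  # Each running or completed backup is a Backup object
  kubectl get backups.velero.io --namespace "$ns"
  # The BackupStorageLocation points Velero at the S3 bucket
  kubectl get backupstoragelocations.velero.io --namespace "$ns"
}
```

Usage: source the snippet, then run list_velero_objects.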

Backup options

You can configure and run Velero cluster backups yourself with the Velero CLI. Alternatively, Creodias Managed Kubernetes lets you enable Velero backups in a simple way, with a predefined subset of features. Under the hood, this feature installs a Velero operator on the cluster.

The table below summarizes the differences between the two approaches so you can choose the right one for your workflow.

Prerequisites

No. 1 Hosting account on Creodias

To use Creodias Managed Kubernetes, you need a general Creodias account. The Managed Kubernetes dashboard is available at https://managed-kubernetes.creodias.eu.

No. 2 A Managed Kubernetes cluster with kubectl access

If you need to create one, see How to create a Kubernetes cluster using the Creodias Managed Kubernetes launcher GUI.

This article assumes a running Creodias Managed Kubernetes cluster that you can administer and connect to with kubectl. Point KUBECONFIG to your kubeconfig file, restrict its permissions, and check that the cluster is reachable:

export KUBECONFIG=/path/to/your/config
chmod 600 "$KUBECONFIG"
kubectl get nodes

If kubectl cannot reach the cluster, fix this before proceeding.
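The same checks can be wrapped in a small pre-flight function. This is a sketch that assumes KUBECONFIG holds a single file path (not a colon-separated list); the function name is hypothetical.

```shell
# Sketch: pre-flight check for the kubeconfig set up above.
check_kubeconfig() {
  if [ -z "${KUBECONFIG:-}" ]; then
    echo "KUBECONFIG is not set" >&2
    return 1
  fi
  if [ ! -f "$KUBECONFIG" ]; then
    echo "kubeconfig file not found: $KUBECONFIG" >&2
    return 1
  fi
  # find -perm 600 (without a leading dash) matches only when the
  # permission bits are exactly rw-------
  if [ -z "$(find "$KUBECONFIG" -perm 600)" ]; then
    echo "permissions on $KUBECONFIG are not 600; run: chmod 600 \"$KUBECONFIG\"" >&2
    return 1
  fi
  # A successful 'kubectl get nodes' shows the API server is reachable
  if ! kubectl get nodes >/dev/null 2>&1; then
    echo "kubectl cannot reach the cluster" >&2
    return 1
  fi
  echo "kubeconfig OK"
}
```

Usage: check_kubeconfig && echo "ready to continue".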

No. 3 Velero CLI on your workstation

You need the Velero CLI for advanced operations, for example restoring a backup created by Creodias Managed Kubernetes.

The versions of Velero on the provisioned Managed Kubernetes clusters may change over time.

If Velero is already installed locally, run:

velero version

This shows both the client version (local) and the server version (on the cluster). The two must match — otherwise some resources (especially CRDs) may fail to restore cleanly.

Since you need the CLI to read the server version, this is a chicken-and-egg situation. The way out is to

install a Velero version that is known to work (here we use v1.16.1),

check the server version, and then

re-install if necessary.

curl -LO https://github.com/vmware-tanzu/velero/releases/download/v1.16.1/velero-v1.16.1-linux-amd64.tar.gz
tar -xzf velero-v1.16.1-linux-amd64.tar.gz
sudo mv velero-v1.16.1-linux-amd64/velero /usr/local/bin/velero
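To avoid hard-coding the version and platform, the three commands above can be parameterized. This sketch assumes the archive naming shown above (velero-VERSION-OS-ARCH.tar.gz) and that a release build exists for your OS and CPU; the function names are hypothetical.

```shell
# Map uname output to the platform suffix used in Velero release archives.
velero_platform() {
  local os arch
  os=$(uname -s | tr '[:upper:]' '[:lower:]')   # e.g. linux, darwin
  case "$(uname -m)" in
    x86_64|amd64)  arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported CPU architecture: $(uname -m)" >&2; return 1 ;;
  esac
  echo "$os-$arch"
}

# Build the download URL for a version tag ($1) and platform ($2).
velero_download_url() {
  echo "https://github.com/vmware-tanzu/velero/releases/download/$1/velero-$1-$2.tar.gz"
}

# Download, unpack and install a specific Velero CLI version, e.g. v1.16.1.
install_velero_cli() {
  local version="$1" platform dir
  platform=$(velero_platform) || return 1
  dir="velero-$version-$platform"
  curl -fLO "$(velero_download_url "$version" "$platform")" || return 1
  tar -xzf "$dir.tar.gz" || return 1
  sudo mv "$dir/velero" /usr/local/bin/velero
}
```

Usage: install_velero_cli v1.16.1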

Now verify versions:

velero version

The output should look similar to this (other fields omitted):

Client:
    Version: v1.16.1
Server:
    Version: v1.16.1

If the versions don’t match, repeat the installation with the exact version shown by the server.
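The comparison can also be scripted. This sketch parses the human-readable output of velero version (an assumption: that format is not a stable interface and may change between releases) and reports the server version to install when the two differ. The function names are hypothetical.

```shell
# Parse `velero version` output from stdin; print "<client> <server>".
parse_velero_versions() {
  awk '
    /^Client:/ { section = "client"; next }
    /^Server:/ { section = "server"; next }
    # The first "Version:" line after each heading holds the version tag
    /Version:/ && section != "" && !(section in v) { v[section] = $2 }
    END { print v["client"], v["server"] }
  '
}

# Compare the local CLI with the server: 0 = match, 1 = mismatch, 2 = server unknown.
check_velero_versions() {
  local client server
  set -- $(velero version 2>/dev/null | parse_velero_versions)
  client=$1 server=$2
  if [ -z "$server" ]; then
    echo "could not read the server version; is KUBECONFIG set?" >&2
    return 2
  fi
  if [ "$client" != "$server" ]; then
    echo "mismatch: client $client, server $server; reinstall the CLI as $server" >&2
    return 1
  fi
  echo "versions match: $client"
}
```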

Note

By default, Velero runs in the velero namespace. Unless otherwise specified, all backups and restores will be created and managed there.

No. 4 Object storage (S3) readiness

Creodias Managed Kubernetes Backups store data in S3-compatible object storage.

When you enable backups in the Creodias Managed Kubernetes UI, the platform generates the bucket, access key, secret key, and endpoint for you.

Costs: stored bytes and egress follow the price list of the cloud/region where the cluster is provisioned.

Optional (advanced): if you plan to point a different cluster to an existing backup location, keep the access key, secret key, bucket name, and S3 endpoint at hand.

For an introduction to object storage, see How to use Object Storage on Creodias.

No. 5 Matching Kubernetes version (for cross-cluster restore)

If you plan to restore a backup onto a new cluster, create the destination Creodias Managed Kubernetes cluster with the same Kubernetes minor version as the source. Cross-version restores may work but are not guaranteed for all CRDs.
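A quick way to compare the two clusters is to read each API server's version through kubectl get --raw /version, which returns JSON with a gitVersion field. A sketch, assuming one kubeconfig file per cluster; the function names and file names are hypothetical.

```shell
# Print the API server's "vMAJOR.MINOR" for the cluster in kubeconfig file $1.
server_minor_version() {
  KUBECONFIG="$1" kubectl get --raw /version |
    sed -n 's/.*"gitVersion": *"\([^"]*\)".*/\1/p' |
    cut -d. -f1,2
}

# Succeed only if both clusters report the same Kubernetes minor version.
same_minor_version() {
  local a b
  a=$(server_minor_version "$1")
  b=$(server_minor_version "$2")
  if [ -z "$a" ] || [ "$a" != "$b" ]; then
    echo "source: ${a:-unknown}, destination: ${b:-unknown}" >&2
    return 1
  fi
  echo "both clusters run Kubernetes $a"
}
```

Usage: same_minor_version clusterA_config.yaml clusterB_config.yaml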

Before you start — quick checklist

You can reach the cluster with kubectl (kubectl get nodes works).

For testing the restore feature: the Velero CLI is installed locally (velero version works).

Environment notes

Creodias Managed Kubernetes automatically installs and manages the in-cluster Velero setup when backups are enabled.

Do not modify Velero resources directly with kubectl or velero for routine tasks (such as schedules, retention, or volume settings), because Creodias Managed Kubernetes will overwrite them.

If you need a fully custom Velero configuration, first disable Creodias Managed Kubernetes Backups and then deploy your own Velero instance separately.

To inspect backup objects directly, you can install an S3 client on your workstation, such as s3cmd or the AWS CLI, or use the boto3 Python library.

The cluster must have at least one worker node running for the backup process to operate. To delete the last node pool from a cluster, you must first disable backups.

Volume backup considerations

Velero can back up Persistent Volume Claims (PVCs) in addition to Kubernetes resources. Be aware that:

Backups of large volumes may take minutes or hours to complete because files are copied into S3.

To ensure consistency, schedule backups during low activity or quiesce applications (e.g. database flush/lock, maintenance mode).

A restore reflects the state at the moment the backup was captured; data written during or after the backup may be missing from it.
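Velero can also run commands inside a pod right before and after it is backed up, configured through pod annotations, which is one way to quiesce an application. The sketch below sets those annotations on a single pod. The function name, pod, container and commands are placeholders; annotations set directly on a pod are lost when the pod is recreated (put them in the pod template to keep them); and whether hooks suit your Creodias setup is an assumption to verify on a test cluster first.

```shell
# Sketch: add Velero pre/post backup hook annotations to one pod.
# Arguments: namespace, pod, container, pre-hook command, post-hook command.
# Commands use Velero's JSON-array syntax, e.g. '["/sbin/fsfreeze", "--freeze", "/data"]'.
add_backup_hooks() {
  local ns="$1" pod="$2" container="$3" pre="$4" post="$5"
  kubectl annotate pod "$pod" --namespace "$ns" --overwrite \
    "pre.hook.backup.velero.io/container=$container" \
    "pre.hook.backup.velero.io/command=$pre" \
    "post.hook.backup.velero.io/container=$container" \
    "post.hook.backup.velero.io/command=$post"
}
```

Usage (hypothetical names): add_backup_hooks db app-0 app '["/sbin/fsfreeze", "--freeze", "/data"]' '["/sbin/fsfreeze", "--unfreeze", "/data"]'. Freezing a filesystem this way requires a privileged container that has fsfreeze installed.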

Enable cluster backups

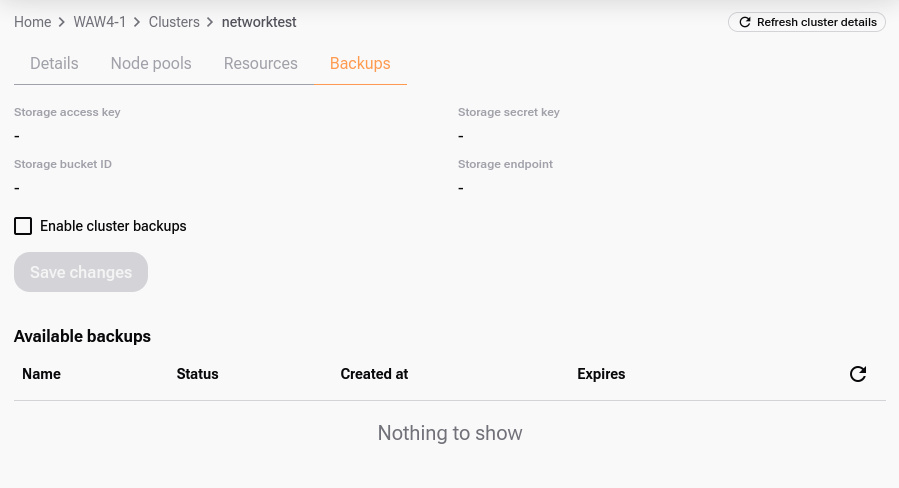

Click the cluster in the cluster list and open the Backups tab.

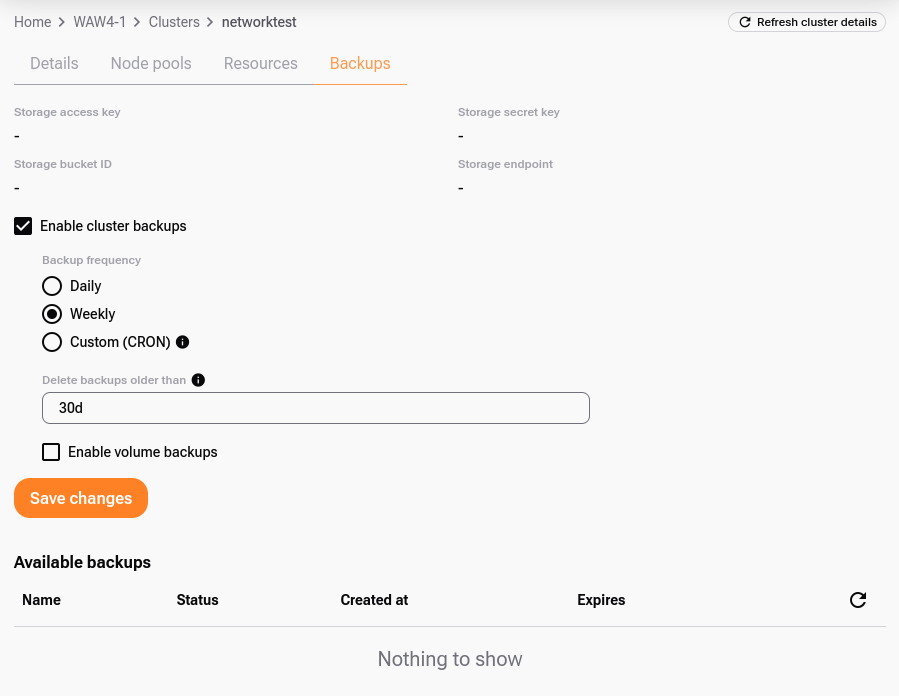

Select the checkbox “Enable cluster backups”. An additional dialog appears:

You can customize the default settings:

Select the backup interval: Daily, Weekly, or Custom (CRON), which takes a cron expression.

Select the retention period, after which older backups are deleted automatically.

Define if Persistent Volume Claims (PVCs) should also be captured by the backup.
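Interval and retention together determine roughly how many backups exist at any time, which matters for your storage quota. A back-of-the-envelope sketch (the function name is hypothetical; Velero removes expired backups periodically rather than instantly, so the real count can be slightly higher):

```shell
# Approximate number of backups kept at once: retention divided by interval,
# rounded up. Both arguments are in minutes.
estimate_backup_count() {
  local retention="$1" interval="$2"
  if [ "$interval" -le 0 ]; then
    echo "interval must be positive" >&2
    return 1
  fi
  echo $(( (retention + interval - 1) / interval ))
}
```

For example, daily backups (1440 minutes) kept for 30 days (43200 minutes) give about 30 backups, while a per-minute schedule kept for 7 days (10080 minutes) would accumulate about 10080.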

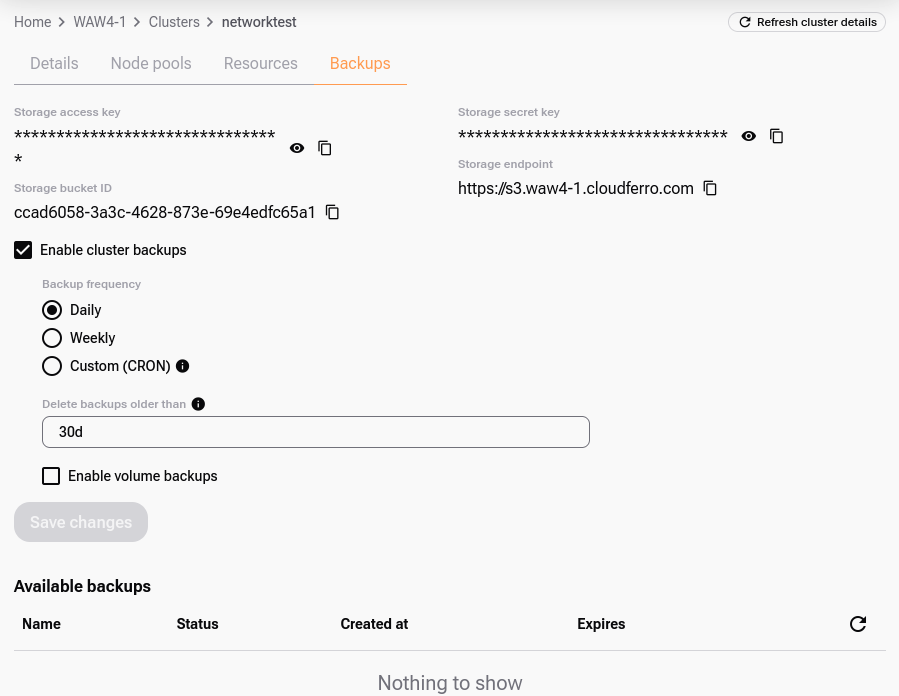

When ready, click Save Changes. The platform will generate the access key, secret key, bucket ID, and S3 endpoint:

Save all four values for future reference.
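One way to keep the four values at hand is a local environment file that only you can read. A minimal sketch; the file path and variable names are hypothetical, the values are assumed to contain no spaces, and a password manager is a reasonable alternative.

```shell
# Sketch: store the four values shown in the GUI in a private env file.
# Arguments: file, access key, secret key, bucket ID, S3 endpoint.
save_backup_credentials() {
  local file="$1"
  # Create/truncate the file and restrict it to the owner before writing secrets
  : > "$file" && chmod 600 "$file" || return 1
  {
    printf 'S3_ACCESS_KEY=%s\n' "$2"
    printf 'S3_SECRET_KEY=%s\n' "$3"
    printf 'S3_BUCKET=%s\n' "$4"
    printf 'S3_ENDPOINT=%s\n' "$5"
  } > "$file"
}
```

Later, load the values into your shell with: . ~/.creodias-backup.env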

The backups will be stored in this bucket in your cluster’s project as files in Velero format. You can access them with the Velero CLI (described below) once your kubeconfig points to the cluster.

Alternatively, you can access them directly over S3 using the endpoint and keys.
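For example, with the AWS CLI (s3cmd works similarly) you can list the backup folders Velero wrote to the bucket. This sketch assumes the AWS CLI is installed and that backups sit under a backups/ prefix at the bucket root, which is Velero's layout when no prefix is configured; depending on your AWS CLI configuration you may also need to set a region. The function name is hypothetical.

```shell
# Sketch: list Velero backup folders in the bucket using the AWS CLI.
# Arguments: bucket, endpoint URL, access key, secret key.
list_bucket_backups() {
  AWS_ACCESS_KEY_ID="$3" AWS_SECRET_ACCESS_KEY="$4" \
    aws s3 ls "s3://$1/backups/" --endpoint-url "$2"
}
```

Usage: list_bucket_backups YOUR_BUCKET_ID https://s3.waw4-1.cloudferro.com YOUR_ACCESS_KEY YOUR_SECRET_KEY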

How to create your first cluster backup

Caution

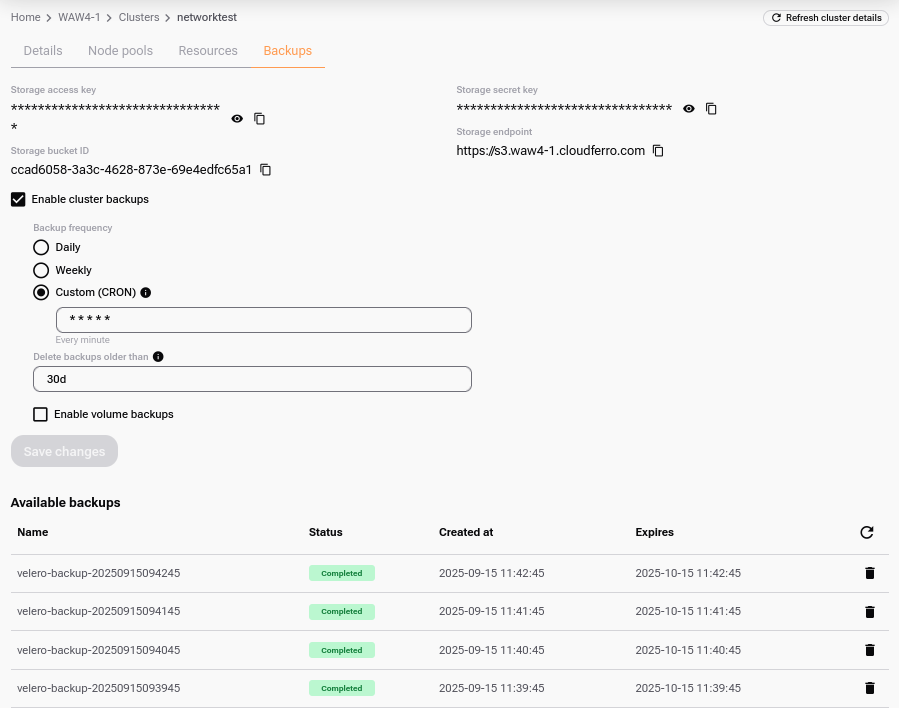

To see a backup created right away when testing the feature, choose the third option, Custom (CRON), and enter five asterisks (* * * * *) as the expression.

This creates a schedule that runs every minute. On the plus side, a backup is created almost immediately; on the minus side, a new backup keeps being created every minute, which can quickly exhaust your project's storage quota.

Warning

Once you have created a test backup, switch back to a production-friendly setting such as Daily or Weekly.

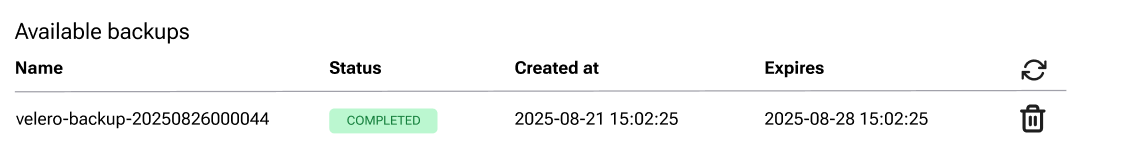

Completed backups appear in the Available backups list at the bottom of the screen.

Click a backup name to open its YAML definition in the Cluster-Resources tab.
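With your kubeconfig pointing to the cluster, the same information is available from the command line. Both commands are read-only; the function name is hypothetical.

```shell
# Read-only sketch: show one backup's status, contents and raw YAML.
show_backup() {
  local name="$1"
  # Human-readable summary, including the list of backed-up resources
  velero backup describe "$name" --details
  # The Backup custom resource itself, as shown in the Cluster-Resources tab
  kubectl get backups.velero.io "$name" --namespace velero -o yaml
}
```

Usage: show_backup velero-backup-20250915093400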

Change the backup settings

You can change the backup settings at any time, including frequency, retention, and whether to include volume backups.

The changes affect only future backups; existing backups are not modified.

List and delete backups

When a backup completes, it appears in the list at the bottom of the Backups screen, so the GUI lists available backups automatically.

Delete using the GUI

Review available backups in the Backups tab.

If needed, click a backup name to view its YAML in Cluster-Resources.

Click the recycle bin icon to delete an individual backup.

About deleting with CLI (optional)

Note

With Creodias Managed Kubernetes backups, the GUI is sufficient for enabling, scheduling, and deleting backups. You typically need the Velero CLI only for restore workflows.

To remove older backups in bulk:

Keep Enable cluster backups turned on so Velero can manage them.

Delete the individual backups you don’t need.

Then disable backups if you no longer want new ones to be created.
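If you prefer the command line for a bulk clean-up, the sketch below deletes every backup whose name ends in a timestamp older than a cutoff. It relies on Velero's naming of scheduled backups (SCHEDULE-YYYYMMDDHHMMSS, as in velero-backup-20250915093400) and on the table layout of velero backup get; as with the GUI, keep cluster backups enabled while it runs. The function name and the DRY_RUN switch are hypothetical; run it with DRY_RUN=1 first.

```shell
# Sketch: delete backups whose name suffix (YYYYMMDDHHMMSS) is older than a cutoff.
# Set DRY_RUN=1 to only print what would be deleted.
delete_backups_older_than() {
  local cutoff="$1" name ts
  # Skip the header row of the table and keep only the NAME column
  for name in $(velero backup get | awk 'NR > 1 { print $1 }'); do
    ts=${name##*-}
    case "$ts" in
      ''|*[!0-9]*) continue ;;   # name does not end in a numeric timestamp
    esac
    [ "${#ts}" -eq 14 ] || continue
    if [ "$ts" -lt "$cutoff" ]; then
      if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "would delete $name"
      else
        velero backup delete "$name" --confirm
      fi
    fi
  done
}
```

Usage: DRY_RUN=1 delete_backups_older_than 20250601000000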

Restore a backup on the existing cluster

Restoring the backup on the existing cluster is a valid scenario when the cluster is functioning properly and you want to revert to a previously captured state.

To restore a backup, you need the Velero CLI on your local machine (see Prerequisite No. 3).

Make sure the Velero CLI targets this cluster (for example, by exporting the KUBECONFIG environment variable) and that cluster backups are enabled on it. Then, to restore from a specific backup such as velero-backup-20250915093400, run:

velero restore create restore-backup-20250915-01 \
  --from-backup velero-backup-20250915093400

Unless you specify --namespace, Velero assumes the velero namespace.

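Under the hood, this command creates a Velero Restore custom resource, which the Velero operator on the cluster processes by re-creating the objects stored in the backup. By default, Velero does not overwrite resources that already exist in the cluster; it leaves them unchanged. To update existing resources instead, see the --existing-resource-policy flag.

For more details, see the Velero documentation. Additional flags, such as --include-namespaces, let you control in detail how the restore is performed.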

To check restore progress and details, run:

velero restore describe restore-backup-20250915-01
velero restore logs restore-backup-20250915-01
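To block until the restore finishes, for example in a script, you can poll the phase of the Restore object (alternatively, velero restore create accepts a --wait flag). A sketch; the function name and default timeout are hypothetical, and only the final phases Completed, PartiallyFailed, Failed and FailedValidation are treated as finished.

```shell
# Sketch: wait until a Velero restore reaches a final phase.
# Arguments: restore name, optional timeout in seconds (default 1800).
wait_for_restore() {
  local name="$1" timeout="${2:-1800}" waited=0 phase
  while [ "$waited" -lt "$timeout" ]; do
    phase=$(kubectl get restores.velero.io "$name" --namespace velero \
      -o jsonpath='{.status.phase}')
    case "$phase" in
      Completed) echo "restore $name completed"; return 0 ;;
      PartiallyFailed|Failed|FailedValidation)
        echo "restore $name ended with phase $phase; see: velero restore logs $name" >&2
        return 1 ;;
    esac
    sleep 10
    waited=$((waited + 10))
  done
  echo "timed out waiting for restore $name (last phase: ${phase:-unknown})" >&2
  return 2
}
```

Usage: wait_for_restore restore-backup-20250915-01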

Restore a backup on a new cluster

Sometimes the original cluster (Cluster A) may no longer be accessible.

In such a case, you can restore its backups onto a new cluster (Cluster B).

Key ideas to keep in mind:

Cluster B must run the same Kubernetes minor version as Cluster A.

Velero CLI must point to Cluster B — so your kubeconfig context must be switched.

Velero needs access to an S3 bucket containing the backups. In most cases this is the bucket from Cluster A, but it could also be another S3 location (for example, if you saved backups elsewhere before deleting Cluster A).

—

Step 1 — Point CLI to Cluster B

From the GUI, copy the kubeconfig file from Cluster B’s Cluster Details and store it under the name clusterB_config.yaml.

export KUBECONFIG=/path/to/clusterB_config.yaml
kubectl cluster-info
velero version

This ensures that all following commands target Cluster B.
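Because the next steps write to whichever cluster KUBECONFIG points to, a small guard can stop you from restoring into the wrong one. This sketch compares the current kubeconfig context with a name you pass in; the function name and the example context name are hypothetical (list yours with kubectl config get-contexts).

```shell
# Sketch: refuse to continue unless the current kubeconfig context is the expected one.
require_context() {
  local expected="$1" current
  current=$(kubectl config current-context 2>/dev/null)
  if [ "$current" != "$expected" ]; then
    echo "current context is '${current:-none}', expected '$expected'" >&2
    return 1
  fi
  echo "targeting context $current"
}
```

Usage: require_context cluster-b-admin && velero backup get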

—

Step 2 — Provide S3 credentials

Velero must authenticate to the S3 bucket that holds the backups.

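Velero's AWS plugin expects these credentials in the AWS shared-credentials file format, stored under one data key of a Kubernetes secret. Write your keys to a local file, then create the secret from it under the key name default (the BackupStorageLocation below refers to it as aws-s3-credentials=default):

cat > credentials-velero <<EOF
[default]
aws_access_key_id=YOUR_ACCESS_KEY
aws_secret_access_key=YOUR_SECRET_KEY
EOF

kubectl create secret generic aws-s3-credentials \
  --namespace velero \
  --from-file=default=credentials-velero

Delete the local credentials-velero file once the secret exists.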

This secret will later be referenced by the BackupStorageLocation.
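The secret can also be created from a script without leaving the credentials on disk. Velero's AWS plugin reads the data key referenced by --credential (here default) as an AWS shared-credentials file, which is the format this sketch writes. S3_ACCESS_KEY and S3_SECRET_KEY are hypothetical variable names holding the keys you saved when enabling backups on Cluster A; the function names are hypothetical too.

```shell
# Render the AWS shared-credentials file that Velero's AWS plugin expects.
velero_credentials_ini() {
  printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' "$1" "$2"
}

# Sketch: create the aws-s3-credentials secret (data key "default") from
# S3_ACCESS_KEY and S3_SECRET_KEY, using a short-lived temporary file.
create_velero_s3_secret() {
  local tmp rc
  tmp=$(mktemp) || return 1
  chmod 600 "$tmp"
  velero_credentials_ini "$S3_ACCESS_KEY" "$S3_SECRET_KEY" > "$tmp"
  kubectl create secret generic aws-s3-credentials \
    --namespace velero \
    --from-file=default="$tmp"
  rc=$?
  rm -f "$tmp"   # remove the plaintext copy whether or not kubectl succeeded
  return "$rc"
}
```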

—

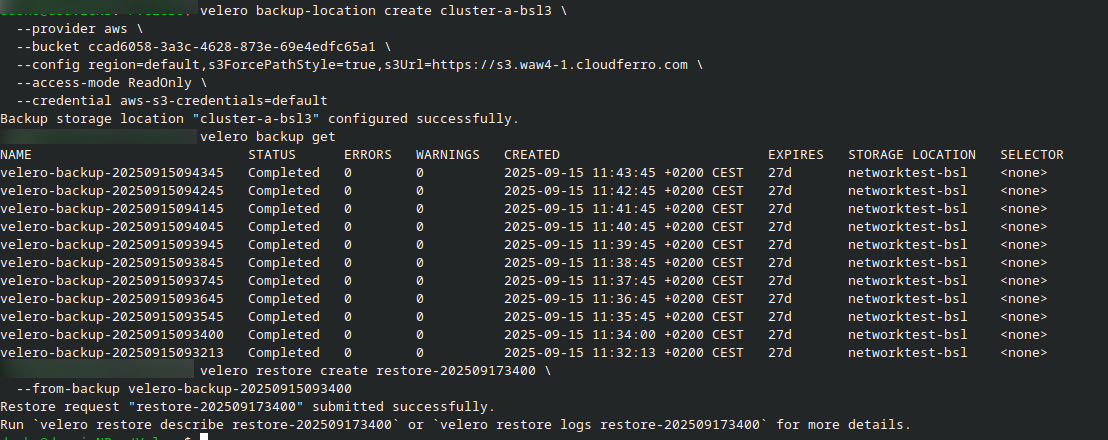

Step 3 — Register the backup location

Now create a new Velero custom resource called BackupStorageLocation on Cluster B. This resource points to the S3 bucket where the backups are stored.

Important

Always create a new BackupStorageLocation (e.g. cluster-a-bsl). Do not overwrite the default location in Cluster B — it will be reset whenever backup settings are updated in the GUI.

Example command (read-only mode):

velero backup-location create cluster-a-bsl \
  --provider aws \
  --bucket YOUR_BUCKET_ID \
  --config region=default,s3ForcePathStyle=true,s3Url=https://s3.waw4-1.cloudferro.com \
  --access-mode ReadOnly \
  --credential aws-s3-credentials=default

Replace YOUR_BUCKET_ID with the bucket ID from Cluster A, and adjust the s3Url value if your S3 endpoint differs from the one shown.

Verify that backups are visible:

velero backup get

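(All backups are stored in the velero namespace by default.) Velero synchronizes backups from a newly registered location periodically, so they may take a minute or so to appear.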
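Velero validates a new location asynchronously and marks it Available or Unavailable (velero backup-location get shows the same status). Before expecting backups to show up, you can wait for that status. A sketch; the function name and timeout are hypothetical, while cluster-a-bsl is the location created above.

```shell
# Sketch: wait until a BackupStorageLocation reports phase "Available".
# Arguments: location name, optional timeout in seconds (default 300).
wait_for_bsl() {
  local name="$1" timeout="${2:-300}" waited=0 phase
  while [ "$waited" -lt "$timeout" ]; do
    phase=$(kubectl get backupstoragelocations.velero.io "$name" \
      --namespace velero -o jsonpath='{.status.phase}')
    if [ "$phase" = "Available" ]; then
      echo "location $name is available"
      return 0
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "location $name not available (phase: ${phase:-unknown}); check bucket, endpoint and secret" >&2
  return 1
}
```

Usage: wait_for_bsl cluster-a-bsl && velero backup get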

—

Step 4 — Restore the backup

Choose one backup from the list and restore it:

velero restore create restore-202509173400 \
  --from-backup velero-backup-20250915093400

To monitor the restore:

velero restore describe restore-202509173400
velero restore logs restore-202509173400
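If you simply want the most recent backup, you can pick it by the timestamp at the end of its name. Note that velero backup get lists backups from every location registered on Cluster B, including Cluster B's own, so this sketch can filter by location through a label selector. It assumes Velero's naming of scheduled backups (SCHEDULE-YYYYMMDDHHMMSS), the table layout of velero backup get, and that backups carry the velero.io/storage-location label; the function name is hypothetical.

```shell
# Sketch: print the newest backup name, judged by its YYYYMMDDHHMMSS suffix.
# Optional $1: only consider backups from this BackupStorageLocation.
latest_backup() {
  if [ -n "${1:-}" ]; then
    velero backup get --selector "velero.io/storage-location=$1"
  else
    velero backup get
  fi | awk '
    NR > 1 {
      n = split($1, parts, "-")
      ts = parts[n]
      # Keep only names ending in a 14-digit timestamp
      if (ts ~ /^[0-9]+$/ && length(ts) == 14 && ts > best) { best = ts; name = $1 }
    }
    END { if (name != "") print name }
  '
}
```

Usage: b=$(latest_backup cluster-a-bsl) && velero restore create "restore-$(date +%Y%m%d%H%M%S)" --from-backup "$b"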

Note for restoring Services of type LoadBalancer

When you restore a Service of type LoadBalancer with Velero — whether onto a new cluster or after the Service was deleted — the restored object will still reference the old, non-existent LoadBalancer. Because of this, the Service will not be functional immediately after the restore.

To allow the cloud controller to provision a fresh LoadBalancer with a new floating IP, you must remove the old OpenStack-specific annotations from the Service.

For example, if your Service is named nginx, run:

kubectl annotate service nginx loadbalancer.openstack.org/load-balancer-address-
kubectl annotate service nginx loadbalancer.openstack.org/load-balancer-id-

After a short while, a new LoadBalancer will be created and the Service will become available again.
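If several LoadBalancer Services were restored, you can remove the same two annotations from all of them at once. A sketch for one namespace, using the annotation keys shown above; the function name is hypothetical.

```shell
# Sketch: drop stale OpenStack load balancer annotations from every Service
# of type LoadBalancer in one namespace, so fresh load balancers get provisioned.
reset_loadbalancer_services() {
  local ns="$1" svc
  for svc in $(kubectl get services --namespace "$ns" \
      -o jsonpath='{range .items[?(@.spec.type=="LoadBalancer")]}{.metadata.name}{"\n"}{end}'); do
    # A trailing "-" tells kubectl annotate to remove the annotation
    kubectl annotate service "$svc" --namespace "$ns" \
      loadbalancer.openstack.org/load-balancer-address- \
      loadbalancer.openstack.org/load-balancer-id-
  done
}
```

Usage: reset_loadbalancer_services default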

Caveats

When you delete the cluster, the backups linked to it are deleted as well. To keep them, copy them to an external location of your own (for example, another S3 bucket in your OpenStack project).

Cluster backups must be enabled for any operation involving backups, such as deleting old backups; under the hood, enabling cluster backups is what keeps Velero active on the cluster.

We strongly recommend against using the Velero CLI to change the settings of cluster backups configured through Managed Kubernetes (GUI, API, etc.), because those settings are overwritten whenever the backup configuration is updated. If you prefer a custom setup, keep the backups feature disabled and install your own Velero operator on the cluster with your preferred settings.
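To keep backups before deleting a cluster, as suggested in the first caveat, one option is a bucket-to-bucket sync with the AWS CLI. This sketch assumes both buckets are reachable through the same endpoint with the same credentials (for example, two buckets in one project), that the destination bucket already exists, and that credentials come from the usual AWS CLI sources such as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. The function names are hypothetical.

```shell
# Count objects in a bucket ($1) at an endpoint ($2).
count_objects() {
  aws s3 ls "s3://$1" --recursive --endpoint-url "$2" | wc -l | tr -d ' '
}

# Sketch: copy everything Velero stored in the cluster's bucket to a bucket you own.
# Arguments: source bucket, destination bucket, endpoint URL.
copy_backup_bucket() {
  local src="$1" dst="$2" endpoint="$3"
  aws s3 sync "s3://$src" "s3://$dst" --endpoint-url "$endpoint" || return 1
  # Rough check: the destination should hold at least as many objects as the source
  if [ "$(count_objects "$dst" "$endpoint")" -lt "$(count_objects "$src" "$endpoint")" ]; then
    echo "destination has fewer objects than source" >&2
    return 1
  fi
  echo "copied s3://$src to s3://$dst"
}
```

Usage: copy_backup_bucket YOUR_BUCKET_ID my-velero-archive https://s3.waw4-1.cloudferro.com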